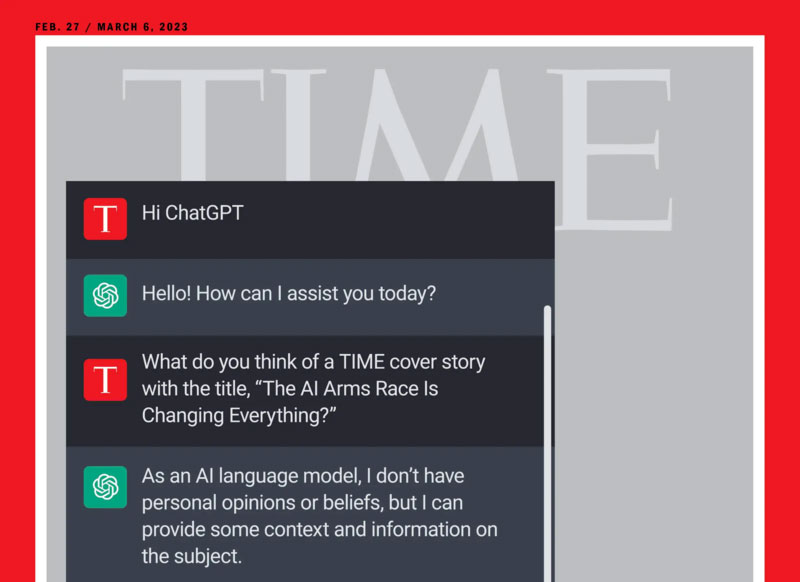

Near the end of 2022, OpenAI, Inc., made the beta version of its ChatGPT product available to the public. This chatbot, powered by “artificial intelligence” (a misnomer, as we’ll see later), promised to produce texts in response to the user’s prompts, spitting out prose on any topic requested, in any style, and of any length.

Get a free sample proofread and edit for your document.

Two professional proofreaders will proofread and edit your document.

This, we were told, was going to put professional writers and editors out of business; surely those eggheads’ reign of terror was at an end, now that technology had put the tools to create Real Writing into the hands of Regular People. Soon those overpaid pencil-pushers would all be sorry! (I’m exaggerating the tone somewhat, but less than you might think.)

Nine months into this alleged AI revolution, the rhetoric has quieted. Certainly, people continue to use ChatGPT for all kinds of purposes. But human writers and editors are still going strong and still showing their value. Indeed, at the time of this writing an ongoing strike by the Writers’ Guild is making Hollywood film and TV producers very nervous.

The AI boom, like so many technological revolutions before it, is looking a bit like a bust.

To understand how AI text has failed to live up to expectations, we must first understand that, despite their name, there’s nothing truly “intelligent” about any of these products. They are what is known in computer science as large language models (LLMs), programs that generate text based on probability. These programs don’t actually create anything in any meaningful sense.

Millions of pages of text are entered into a program in a process called “training,” and then they string words together within a user-defined context, based on the likelihood that one given word will follow another. It’s effectively just a more sophisticated version of the predictive text (i.e., autocorrect) in your phone’s messaging application, distinguished mainly by the fact that it’s trained on a much, much larger body of writing.

(Your phone’s predictive function is trained on a relatively small corpus because it’s expected to be used only in limited circumstances, generally with simple language; so it’s fine for, “Pick you up in 10 minutes,” “Meet me at the diner on East Main Street,” or even “Hey girl u up,” but as your language gets more, um, colorful, the results can be pretty ducking hilarious. But I digress.)

The problem with LLMs, even those trained on stupendously huge datasets, is that they are entirely probabilistic. That is, they put words together based on what seems linguistically likely, not on what is factually correct, which makes them no substitute for human research. In one high-profile case, a lawyer was fined $5,000 for submitting a legal brief generated by ChatGPT; the giveaway was that the brief cited a case that never existed.

Computer scientists refer to these non-facts rather charmingly as “hallucinations,” which implies that they are a malfunction of the program. In fact, they show the system is working as intended. An LLM is designed to make its statements with an authoritative tone with no regard for their truth or falsehood. It has no expertise in any subject matter; it knows nothing of history, or politics, or literature, or law, or anything else. Its only function is to approximate correct grammar, syntax, and usage to assemble sentences that seem plausibly human.

But months into this large-scale experiment, the results are actually getting worse. One study showed that within just three months, ChatGPT’s accuracy rate in answering a simple mathematics-based question fell precipitously. OpenAI has not released the chatbot’s source code, so the mechanism driving this decline is unknown, but a feedback loop is a strong possibility.

The LLM, you see, is initially trained on a dataset of texts created by humans—often obtained and processed using exploitative labor practices, but that’s another story—but as it continues to operate it is continuously being trained on new text, mostly scraped from the web. But with more and more web-based text itself being created using chatbots, the LLM is being fed a steady diet of its own fact-free, surface-level plausible text. If there’s a universal truth in computer science, it’s “garbage in, garbage out”; so it is with generative text. The results will be ever less robust as subsequent generations of data become hopelessly inbred.

That’s precisely the opposite of how feedback works with human editing. One of my proudest moments here at ProofreadingPal came when I served as lead proofreader on a couple of pieces of short fiction by a novice writer. Though the first story that came across my desktop wasn’t very well written, I thought it had a lot of heart and a few good lines. I made some detailed notes, pointing out the stuff I liked but also noting the weakness of the ending, suggesting that it would be more effective to imply the story’s theme rather than spelling it out.

I thought no more about it until a few months later, when another story by the same client hit my work queue. To my delight, the writing was much improved; the writer had built upon the strengths I had noticed and worked to compensate for their weaknesses. Best of all, the ending was subtle and restrained, achieving genuine poignancy for what it left unsaid. I fixed a few lingering errors and left some positive notes, complimenting the author on their progress. And a while after that, the client sent us a note informing us that this second short story had won a local literary prize.

Today, something like 80 percent of the traffic on the internet is computers talking to other computers. Most online text will never be read by human beings, neither is it meant to be. But know this: if you’re writing something meant for humans to read, it’s best to have humans involved in its creation at all steps in the process.

That’s a bit of common sense that beats “artificial intelligence.”

Jack F.

Get a free sample proofread and edit for your document.

Two professional proofreaders will proofread and edit your document.

Get a free sample proofread and edit for your document.

Two professional proofreaders will proofread and edit your document.

We will get your free sample back in three to six hours!